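The Hugging Face 🤗 transformers library is widely used because it makes a broad range of deep learning models and datasets easy to use. Its centerpiece is arguably the Trainer class for training models, which offers a great many training features. Configuring all of those features, however, means accepting an enormous number of parameters as arguments. This is why many people (the author included) find Hugging Face hard to use.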

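This post first walks through how to use the Hugging Face Trainer class. It focuses on which arguments are used most often and how they should be passed to Trainer. It then fine-tunes a GPT-2 model on the IMDb dataset and applies it to a real task: classifying movie reviews.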

What is Hugging Face?

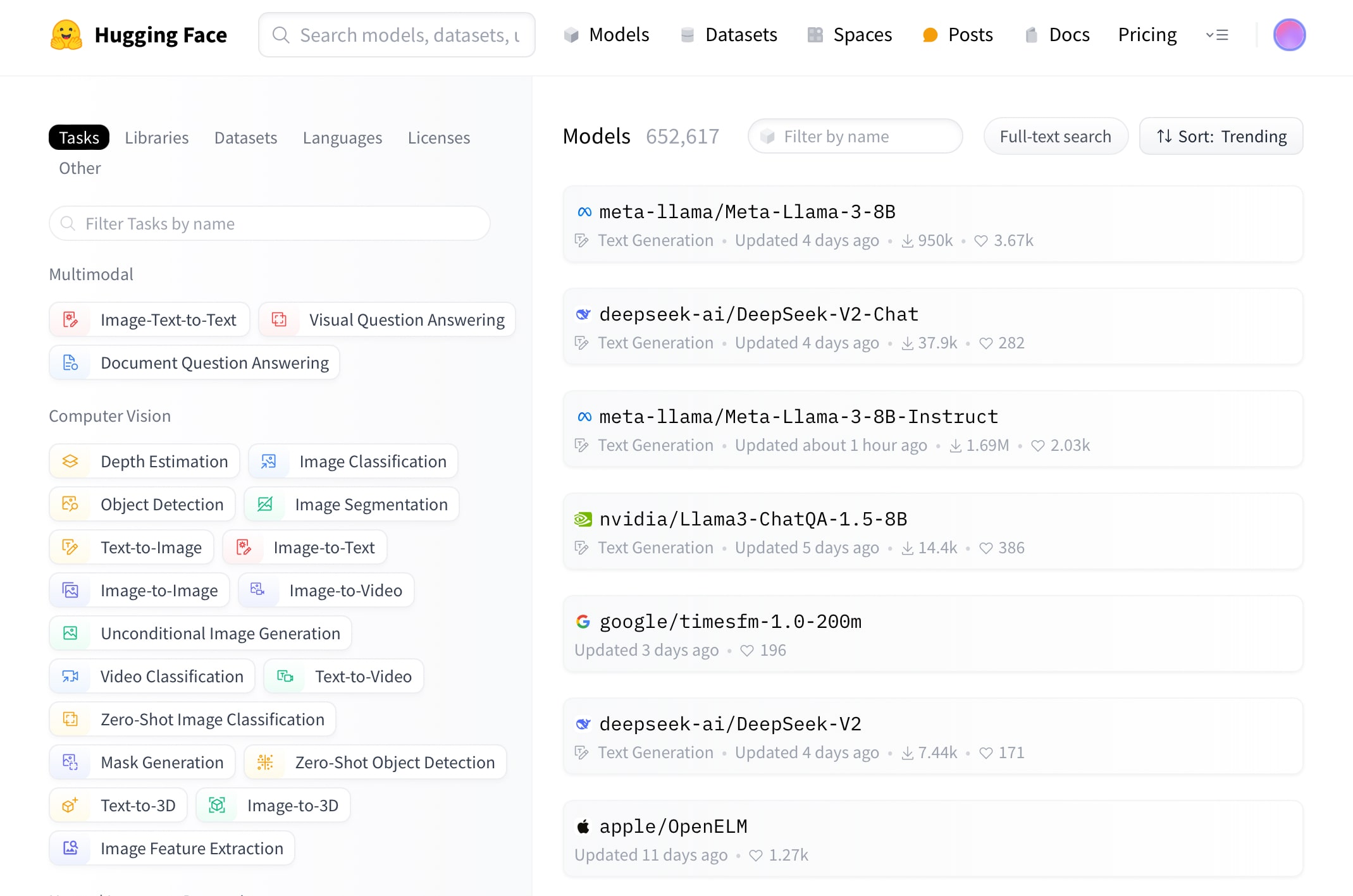

Hugging Face 🤗 is a company and a community that provides services for training, sharing, and deploying machine learning and deep learning models. Its offerings fall broadly into two groups.

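- The Hugging Face Hub, for sharing models, datasets, and more. Pretrained models and datasets are well organized there and, being open source, are easy to download and use.
- The Python transformers library, for training models. Together with its companion datasets library, it provides APIs for two things: easily loading the pretrained models and datasets published on the Hub, and easily training models.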

Of the two, this post covers the latter: how to use the transformers library provided by Hugging Face.

Parameters of Trainer and TrainingArguments

When training a model with the Hugging Face Trainer API, some parameters go into TrainingArguments and others go directly into Trainer. For example:

```python
from transformers import AutoModelForSequenceClassification, Trainer, TrainingArguments

# ds_train, ds_test and compute_metrics must be defined beforehand.
training_arguments = TrainingArguments(
    output_dir="./results",
    evaluation_strategy="epoch",  # newer transformers releases name this argument eval_strategy
    num_train_epochs=3,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=32,
    learning_rate=3e-5,
    logging_strategy="epoch",
    load_best_model_at_end=True,
    save_strategy="epoch",  # must match the evaluation strategy when load_best_model_at_end=True
    metric_for_best_model="accuracy",
)

trainer = Trainer(
    model=AutoModelForSequenceClassification.from_pretrained("bert-base-uncased"),
    train_dataset=ds_train,
    eval_dataset=ds_test,
    args=training_arguments,
    compute_metrics=compute_metrics,
)

trainer.train()
```

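In other words, you first create a TrainingArguments object and then pass it to Trainer through the args parameter. That is how Trainer receives the information it needs for training. Let's start with the parameters that TrainingArguments accepts.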

TrainingArguments

Looking up TrainingArguments in the official API documentation turns up a very long list of arguments. Here are some of the most commonly used ones.

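- output_dir (str): the only required argument. It is the local directory where the trained model and checkpoints are saved (e.g. './results'). Its name also serves as the default Hub repository name. With a name like 'bert-uncased-imdb-finetuned', calling trainer.push_to_hub() after training uploads the model to a repository of that name, unless hub_model_id is set.
- overwrite_output_dir (bool): if True, overwrites the contents of output_dir even if it already contains files.
- num_train_epochs: the number of training epochs.
- per_device_train_batch_size: the batch size per device (GPU or CPU) during training.
- per_device_eval_batch_size: the batch size per device during evaluation.
- learning_rate: the (initial) learning rate.
- lr_scheduler_type: the learning rate scheduler to use.
  - The default is linear. Other options include constant, cosine, cosine_with_restarts, polynomial, and constant_with_warmup.
  - Apart from constant, these schedulers can start with a warm-up phase. Its length is set by warmup_steps or warmup_ratio, and there is no warm-up by default.
- weight_decay: the weight decay that the AdamW optimizer applies to all layers except biases and LayerNorm weights (0 by default).
- logging_strategy: when to write logs. The default is steps.
  - no: disables logging.
  - epoch: logs at the end of every epoch.
  - steps: logs every logging_steps steps.
- logging_steps: the logging interval used with logging_strategy='steps' (500 by default). It can be an integer or a float in [0, 1). A float is interpreted as a fraction of the total number of training steps.
- save_strategy: when to save model checkpoints. As above, it is one of no, steps, or epoch; with steps, the interval is set by save_steps.
- save_total_limit: the maximum number of checkpoints to keep. Older checkpoints beyond this number are deleted.
- evaluation_strategy: when to run evaluation. It is one of no (the default), steps, or epoch. With steps, the interval is set by eval_steps, which defaults to logging_steps. Newer releases call this argument eval_strategy.
- use_cpu: if True, runs on the CPU even when a GPU is available.
- seed: the random seed. The default is 42, a popular choice.
- fp16: whether to use fp16 mixed-precision training (True/False).
- disable_tqdm: if True, disables the tqdm progress bars. In Jupyter notebooks, it also disables the table of metrics shown alongside them.
- load_best_model_at_end: if True, loads the best checkpoint, as judged by evaluation, once training finishes. This requires save_strategy to match the evaluation strategy.
- metric_for_best_model: what "best" means. Pass, as a string, the name of one of the metrics returned by the compute_metrics function described below (e.g. "accuracy"). If unset, the evaluation loss is used.
- greater_is_better: whether a higher value of that metric is better. It defaults to True unless the metric name ends in "loss".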
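Several of these arguments interact through the total number of optimizer steps. The sketch below is a rough re-creation, not the library's code, of how Trainer turns a float logging_steps and a warmup_ratio into absolute step counts. It assumes a single process, a map-style dataset, the default drop_last=False, and a whole number of epochs. The rounding used with gradient accumulation may differ between transformers versions. The function name plan_steps is hypothetical.

```python
import math


def plan_steps(num_examples, per_device_batch_size, num_epochs,
               num_devices=1, grad_accum_steps=1,
               logging_steps=500, warmup_ratio=0.0):
    """Rough estimate of the step counts Trainer derives from TrainingArguments.

    Assumes a map-style dataset, drop_last=False and an integer number of epochs.
    """
    # Batches per epoch: the last, smaller batch still counts as a step.
    batches_per_epoch = math.ceil(num_examples / (per_device_batch_size * num_devices))
    # One optimizer update every grad_accum_steps batches (rounding may vary by version).
    updates_per_epoch = max(batches_per_epoch // grad_accum_steps, 1)
    max_steps = updates_per_epoch * num_epochs
    # A float logging_steps below 1 is read as a fraction of max_steps.
    if logging_steps < 1:
        logging_steps = math.ceil(max_steps * logging_steps)
    # warmup_ratio is likewise converted into an absolute number of warm-up steps.
    warmup_steps = math.ceil(max_steps * warmup_ratio)
    return {
        "updates_per_epoch": updates_per_epoch,
        "max_steps": max_steps,
        "logging_steps": logging_steps,
        "warmup_steps": warmup_steps,
    }


# Same sizes as the IMDb run in this post: 10,000 examples, batch size 16, 3 epochs.
plan = plan_steps(10_000, 16, 3, logging_steps=0.1, warmup_ratio=0.06)
print(plan)
# → {'updates_per_epoch': 625, 'max_steps': 1875, 'logging_steps': 188, 'warmup_steps': 113}
```

With these sizes, logging_steps=0.1 would log roughly every 188 steps instead of the default 500. The default of 500 would produce only three logs over the whole 1,875-step run.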

Trainer

TrainingArguments carries the fine-grained details of how training should run. Trainer, by contrast, takes the more fundamental, big-picture pieces.

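- model: a transformers model object or a PyTorch nn.Module. Instead of model, you can provide model_init, a function that builds and returns a new model object each time it is called.
- args: the TrainingArguments object introduced above.
- data_collator: the function that combines a list of elements from the train/evaluation dataset into a batch. If no tokenizer is given, default_data_collator() is used; if one is given, an instance of DataCollatorWithPadding is used.
- train_dataset: the training dataset, arguably the most important argument. It can be a datasets.Dataset from Hugging Face's datasets library or a PyTorch Dataset.
- eval_dataset: the evaluation dataset, in the same format as train_dataset.
- tokenizer: the tokenizer used to preprocess the data. Newer releases call this argument processing_class.
- compute_metrics: the function that computes metrics during evaluation. Its input and output must follow a specific format, explained below.
- optimizers: the optimizer and learning rate scheduler to use for training, given as a tuple of a torch.optim.Optimizer and a torch.optim.lr_scheduler.LambdaLR. If nothing is given, Trainer uses AdamW together with a linear schedule controlled by args.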
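To pass your own optimizer and scheduler through optimizers, build both against the same model object you give to Trainer. The sketch below assumes PyTorch. The small nn.Linear stands in for the real model. The helper linear_warmup_then_decay is a hypothetical name for an lr_lambda with the same shape as transformers' get_linear_schedule_with_warmup: linear warm-up, then linear decay to zero. The 100 warm-up steps are arbitrary. The 1,875 total steps correspond to 10,000 examples at batch size 16 for 3 epochs, as in the fine-tuning example in this post.

```python
import torch


def linear_warmup_then_decay(warmup_steps, total_steps):
    """Return an lr_lambda: multiplier rises linearly to 1.0, then decays linearly to 0.0."""
    def lr_lambda(step):
        if step < warmup_steps:
            return step / max(1, warmup_steps)
        return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))
    return lr_lambda


# Stand-in model; in the post this would be the GPT-2 classifier.
model = torch.nn.Linear(8, 2)
optimizer = torch.optim.AdamW(model.parameters(), lr=3e-5, weight_decay=0.01)
scheduler = torch.optim.lr_scheduler.LambdaLR(
    optimizer, lr_lambda=linear_warmup_then_decay(warmup_steps=100, total_steps=1875)
)

# This pair is what Trainer's optimizers argument expects, e.g.
# trainer = Trainer(model=model, args=training_arguments, optimizers=(optimizer, scheduler), ...)
optimizers = (optimizer, scheduler)

# Trainer steps the scheduler once per optimizer update; simulate 50 updates here.
for _ in range(50):
    optimizer.step()   # no gradients yet, so parameters are left unchanged
    scheduler.step()
print(scheduler.get_last_lr())  # halfway through warm-up: 0.5 * 3e-5
```

A LambdaLR multiplies the optimizer's base learning rate by whatever the lambda returns for the current step. This is why a single Python function is enough to describe the whole schedule.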

Arguments that must follow a specific format

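Some of the TrainingArguments and Trainer parameters described above expect a function or an object of a particular class rather than a number or a string. A function passed this way must follow a specific input and output format, and an object must be an instance of the expected class; otherwise an error is raised. Of the arguments covered here, data_collator and compute_metrics fall into this category. Let's briefly look at the format each one requires.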

data_collator

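As described above, data_collator bundles data into batches in a form that can be passed to the model. Its expected type, DataCollator, is essentially any callable that takes a list of dataset elements and returns a batch (a dictionary). transformers ships several ready-made collators, and which one to use depends on the task.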

- DataCollatorWithPadding: pads the input sequences to the same length to build a batch. Used for tasks such as text classification.
- DataCollatorForSeq2Seq: for sequence-to-sequence tasks (e.g. translation, summarization). It pads both the source and the target sequences.
- DataCollatorForLanguageModeling: for language modeling tasks such as masked language modeling (MLM). mlm_probability sets the probability of masking a token.
- DataCollatorForTokenClassification: builds batches for token classification tasks (e.g. NER).
- DefaultDataCollator: simply stacks the data into batches without any special preprocessing. Its function form, default_data_collator, is what Trainer uses by default.
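What DataCollatorWithPadding does for a classification batch can be sketched in a few lines of NumPy. This is a simplified, hypothetical pad_collate, not the library implementation. It does three things:

- pads input_ids on the right to the longest sequence in the batch;
- builds the matching attention_mask;
- renames label to labels, as DataCollatorWithPadding also does.

The real collator returns PyTorch tensors and delegates padding to the tokenizer. The token ids below are arbitrary.

```python
import numpy as np


def pad_collate(features, pad_token_id):
    """Hypothetical minimal collator: right-pad input_ids to the longest sequence,
    build attention_mask, and rename 'label' to 'labels'."""
    max_len = max(len(f["input_ids"]) for f in features)
    input_ids = np.full((len(features), max_len), pad_token_id, dtype=np.int64)
    attention_mask = np.zeros((len(features), max_len), dtype=np.int64)
    for i, f in enumerate(features):
        n = len(f["input_ids"])
        input_ids[i, :n] = f["input_ids"]
        attention_mask[i, :n] = 1  # 1 = real token, 0 = padding
    batch = {"input_ids": input_ids, "attention_mask": attention_mask}
    if "label" in features[0]:
        # Models expect the target under the name 'labels'.
        batch["labels"] = np.array([f["label"] for f in features], dtype=np.int64)
    return batch


# Two tokenized reviews of different lengths; 50256 is GPT-2's EOS id, reused as padding.
features = [
    {"input_ids": [101, 102, 103], "label": 1},
    {"input_ids": [104, 105], "label": 0},
]
batch = pad_collate(features, pad_token_id=50256)
print(batch["input_ids"])
print(batch["attention_mask"])
```

Padding to the longest sequence in each batch, rather than to a fixed length, keeps batches of short reviews small.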

compute_metrics

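compute_metrics computes the metrics used during evaluation. It is a function that takes an EvalPrediction object and returns a dictionary.

EvalPrediction is a named-tuple-like object that always has two attributes, predictions and label_ids. As the names suggest, predictions holds the model's predictions (for classification, the logits) and label_ids holds the ground-truth labels from the dataset.

The job of compute_metrics is to compare the two using one or more metrics. When it is done, it must return a dictionary with metric names as keys and their values as values.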

Here is an example compute_metrics:

```python
# datasets.load_metric has been removed from recent datasets releases;
# the evaluate library (pip install evaluate) provides the same metrics.
import evaluate

# Load the metrics
accuracy_metric = evaluate.load("accuracy")
f1_metric = evaluate.load("f1")


def compute_metrics(eval_pred):
    predictions, label_ids = eval_pred
    # Equivalently: predictions, label_ids = eval_pred.predictions, eval_pred.label_ids
    preds = predictions.argmax(axis=1)  # logits -> predicted class ids
    accuracy = accuracy_metric.compute(predictions=preds, references=label_ids)
    f1 = f1_metric.compute(predictions=preds, references=label_ids, average="weighted")
    return {
        "accuracy": accuracy["accuracy"],
        "f1": f1["f1"],
    }
```
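The same contract can be met with NumPy alone, which is handy for testing compute_metrics offline without downloading metric scripts. The sketch below is a hypothetical compute_metrics_np meant to reproduce accuracy and support-weighted F1, i.e. F1 with average="weighted". FakeEvalPrediction is a stand-in named tuple, not a transformers class; it is used only to feed the function made-up logits.

```python
from collections import namedtuple

import numpy as np

# Stand-in for transformers.EvalPrediction, only for trying the function offline.
FakeEvalPrediction = namedtuple("FakeEvalPrediction", ["predictions", "label_ids"])


def compute_metrics_np(eval_pred):
    """Accuracy and support-weighted F1 from logits, using only NumPy."""
    logits, label_ids = eval_pred
    preds = np.asarray(logits).argmax(axis=-1)
    labels = np.asarray(label_ids)
    accuracy = float((preds == labels).mean())

    f1_sum = 0.0
    for c in np.unique(labels):
        tp = np.sum((preds == c) & (labels == c))
        fp = np.sum((preds == c) & (labels != c))
        fn = np.sum((preds != c) & (labels == c))
        denom = 2 * tp + fp + fn
        f1_c = 2 * tp / denom if denom > 0 else 0.0
        f1_sum += f1_c * np.sum(labels == c)  # weight each class by its support
    f1 = float(f1_sum / len(labels))
    return {"accuracy": accuracy, "f1": f1}


eval_pred = FakeEvalPrediction(
    predictions=np.array([[2.0, 0.1], [0.3, 1.5], [1.2, 0.4], [0.2, 0.9]]),
    label_ids=np.array([0, 1, 1, 1]),
)
print(compute_metrics_np(eval_pred))
```

Classes that appear only in the predictions have zero support, so they do not affect the weighted average. That is why the loop only visits the labels present in label_ids.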

Using a custom model

The Trainer API is optimized for training transformers models, but it can also train custom models implemented in PyTorch. The Trainer API documentation lists a few points to watch out for when using a custom model:

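- The model must always return a tuple or a ModelOutput (or a subclass of it).
- If a labels argument is given, the model must compute the loss and return it as the first element of the tuple (when the model returns a tuple).
- The model may accept several label arguments. In that case, pass their names as a list via the label_names argument of TrainingArguments, and none of them may be named simply label.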
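A minimal custom model that respects these rules might look like the following. TinyReviewClassifier is a hypothetical toy (mean-pooled embeddings plus a linear head) and assumes PyTorch. Naming the forward arguments input_ids, attention_mask and labels matters. With the default remove_unused_columns=True, Trainer drops any dataset column that does not appear in the model's forward signature.

```python
import torch
from torch import nn


class TinyReviewClassifier(nn.Module):
    """Hypothetical toy model that follows the Trainer rules listed above."""

    def __init__(self, vocab_size=50257, hidden=32, num_labels=2):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, hidden)
        self.head = nn.Linear(hidden, num_labels)

    def forward(self, input_ids, attention_mask=None, labels=None):
        emb = self.embed(input_ids)                           # (batch, seq, hidden)
        if attention_mask is None:
            attention_mask = torch.ones_like(input_ids)
        mask = attention_mask.unsqueeze(-1).float()           # (batch, seq, 1)
        # Mean over real tokens only; padding positions are zeroed out.
        pooled = (emb * mask).sum(dim=1) / mask.sum(dim=1).clamp(min=1.0)
        logits = self.head(pooled)                            # (batch, num_labels)
        if labels is not None:
            loss = nn.functional.cross_entropy(logits, labels)
            return (loss, logits)  # the loss must be the first element
        return (logits,)           # always a tuple, even without labels


torch.manual_seed(0)
model = TinyReviewClassifier(vocab_size=100)
ids = torch.tensor([[1, 2, 3], [4, 5, 0]])
mask = torch.tensor([[1, 1, 1], [1, 1, 0]])
loss, logits = model(ids, mask, labels=torch.tensor([1, 0]))
print(loss.item(), logits.shape)
```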

Fine-tuning GPT-2

Now that we know how to use the Hugging Face Trainer, let's train an actual language model. We fine-tune GPT-2 on the IMDb movie review dataset for a simple task: classifying whether a review rates the movie positively or negatively.

Installing the libraries

```
%%capture
!pip install -U datasets transformers accelerate evaluate
```

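This installs the required packages. On Colab these packages are already installed. As of May 15, 2024, however, training did not work without this step, presumably because of a version issue. If running the code in your environment reports that some other package is missing, install it with pip as well.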

Loading the model and tokenizer

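```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification

model_ckpt = "openai-community/gpt2"
tokenizer = AutoTokenizer.from_pretrained(model_ckpt)
# Two classes: negative / positive review (the classification head is newly initialized).
model = AutoModelForSequenceClassification.from_pretrained(model_ckpt, num_labels=2)
```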

We load GPT-2 from the Hugging Face Hub. The Use in Transformers button on the model's Hub page gives you this loading code directly.

Loading the dataset

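```python
from datasets import load_dataset

dataset = load_dataset("stanfordnlp/imdb")
# Subsample for speed; a fixed seed keeps the subsets reproducible.
ds_train = dataset["train"].shuffle(seed=42).select(range(10000))
ds_test = dataset["test"].shuffle(seed=42).select(range(2500))

# Tokenize the reviews (truncated to 256 tokens) so the model receives input_ids.
def tokenize(batch):
    return tokenizer(batch["text"], truncation=True, max_length=256)

ds_train = ds_train.map(tokenize, batched=True)
ds_test = ds_test.map(tokenize, batched=True)
```

We load the IMDb dataset, which is also available from the Hugging Face Hub. The full dataset has 25,000 examples in each of the train and test sets, but for faster training we use only 10,000 and 2,500 examples, respectively. Each review is then tokenized and truncated to 256 tokens. Without this step the model would receive no input_ids, and some reviews would exceed GPT-2's 1,024-token limit.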

Defining the metrics

```python
# datasets.load_metric has been removed from recent datasets releases;
# the evaluate library provides the same metrics.
import evaluate

accuracy_metric = evaluate.load("accuracy")
f1_metric = evaluate.load("f1")


def compute_metrics(eval_pred):
    predictions, label_ids = eval_pred.predictions, eval_pred.label_ids
    predictions = predictions.argmax(axis=1)  # logits -> predicted class ids
    accuracy = accuracy_metric.compute(predictions=predictions, references=label_ids)
    f1 = f1_metric.compute(predictions=predictions, references=label_ids, average="weighted")
    return {
        "accuracy": accuracy["accuracy"],
        "f1": f1["f1"],
    }
```

To specify the metrics used during evaluation, we define a compute_metrics function; here we use accuracy and the F1 score. Make sure the function follows the required input and output format.

Setting the padding token and defining the data collator

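```python
from transformers import DataCollatorWithPadding

# GPT-2 has no padding token, so reuse the EOS token for padding.
model.config.pad_token_id = tokenizer.eos_token_id
tokenizer.pad_token_id = tokenizer.eos_token_id

# Pads each batch to its longest sequence; max_length only takes effect with padding="max_length".
data_collator = DataCollatorWithPadding(tokenizer=tokenizer, max_length=256)
```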

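To process several sequences of different lengths together in one batch, they must be padded, and the data_collator defined above does this. We also need to tell both the model and the tokenizer which token is the padding token. GPT-2 does not define one, so it is common to reuse the EOS (end of sequence) token, as shown above.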
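Why does the model need pad_token_id as well? GPT2ForSequenceClassification classifies from the logits at the last real token of each sequence and locates that token through config.pad_token_id. Without it, batches larger than one raise an error. The NumPy sketch below approximates that pooling step under right padding; it is not the library code. last_token_index is a hypothetical helper, and the logits are made up.

```python
import numpy as np


def last_token_index(input_ids, pad_token_id):
    """Index of the rightmost non-padding token in each row (sketch)."""
    input_ids = np.asarray(input_ids)
    non_pad = input_ids != pad_token_id
    positions = np.arange(input_ids.shape[-1])
    # Padding positions become 0, so argmax picks the largest real-token position.
    return (positions * non_pad).argmax(axis=-1)


PAD = 50256  # GPT-2's EOS id, reused as the padding id in this post
batch = [[101, 102, 103, 104],
         [105, 106, PAD, PAD]]
idx = last_token_index(batch, PAD)

rng = np.random.default_rng(0)
token_logits = rng.normal(size=(2, 4, 2))          # (batch, seq_len, num_labels), made up
pooled = token_logits[np.arange(len(idx)), idx]    # (batch, num_labels): one row per review
print(idx)
```

Because the EOS token doubles as padding, this only works cleanly when the reviews themselves do not contain EOS tokens, which holds for plain IMDb text.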

Defining the Trainer

Now it is time to gather everything we have defined and create the Trainer.

```python
from transformers import Trainer, TrainingArguments

training_arguments = TrainingArguments(
    output_dir="./results",
    evaluation_strategy="epoch",  # newer transformers releases name this argument eval_strategy
    num_train_epochs=3,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=32,
    learning_rate=3e-5,
    logging_strategy="epoch",
    load_best_model_at_end=True,
    save_strategy="epoch",
    metric_for_best_model="accuracy",
)

trainer = Trainer(
    model=model,
    train_dataset=ds_train,
    data_collator=data_collator,
    eval_dataset=ds_test,
    args=training_arguments,
    compute_metrics=compute_metrics,
)
```

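As covered above, define TrainingArguments and then Trainer, passing in the required parameters one by one. You don't have to follow this post exactly. Changing the arguments one at a time or adding other parameters is a good way to deepen your understanding.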

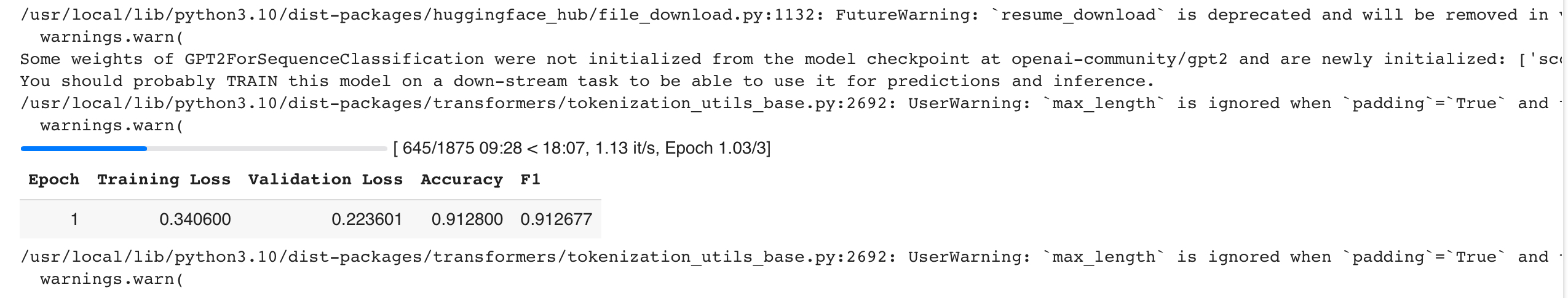

Training

```python
trainer.train()
```

As shown above, training proceeds as expected.
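To apply the fine-tuned model to reviews, you need to turn its outputs into labels. trainer.predict(ds_test) returns a PredictionOutput whose predictions field holds the logits. The sketch below converts such logits into readable labels with a softmax and an argmax. It assumes the stanfordnlp/imdb convention 0 = neg, 1 = pos. The logits are made up rather than taken from a real run, and logits_to_labels is a hypothetical helper.

```python
import numpy as np

ID2LABEL = {0: "neg", 1: "pos"}  # label convention of the stanfordnlp/imdb dataset


def logits_to_labels(logits):
    """Softmax over the last axis, then pick the most likely class."""
    logits = np.asarray(logits, dtype=np.float64)
    shifted = logits - logits.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    probs = np.exp(shifted)
    probs /= probs.sum(axis=-1, keepdims=True)
    pred_ids = probs.argmax(axis=-1)
    return [ID2LABEL[int(i)] for i in pred_ids], probs


# In practice the logits would come from e.g.
#   output = trainer.predict(ds_test); logits = output.predictions
logits = [[-1.2, 2.3], [0.8, -0.5]]  # made-up values for illustration
labels, probs = logits_to_labels(logits)
print(labels)  # → ['pos', 'neg']
```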

References

"Hugging Face", Wikipedia

Trainer API documentation

Why data scientists love the number 42

GitHub: transformers EvalPrediction source code

transformers.utils.ModelOutput documentation